One of the pinnacles of natural development is the eye. This incredibly complex sensor is one of the most exceptional pieces of design in the world and so far has been almost impossible to replicate accurately. However, neuromorphic sensors come very close to achieving the same level of complexity as the human eye. Neuromorphic systems have been a major developmental area in electronics and surveillance in the past decade, and a growing number of systems have been developed that can acquire, process and store data at phenomenal speeds.

In this article, we’ll examine the science and technology of neuromorphic sensor systems, including their history and their characteristics. We’ll also take a look at the applications that could benefit from neuromorphic technology.

Are neuromorphic systems as effective as biomechanical systems found in nature? And does science really have the ability to improve on the natural blueprint? Let’s take a closer look at neuromorphic technology and the future of sensory information gathering.

What are neuromorphic sensors?

Also known as ‘event cameras’, ‘dynamic vision sensors’ or ‘silicon retinas’, neuromorphic sensors are bio-inspired sensors that electronically mimic the principles of biological sensory neurons.

The technology forms part of a larger approach to advancing computer technology. Neuromorphic engineering takes the human brain as its inspiration. The objective is to process signals in the same way that the brain does when it receives data through the rods and cones of the human eye. The spur for this advancement has been the development of the artificial neural network (ANN) model. The ultimate aim is to equal the processing power of the human brain, and then go beyond it in terms of information gathering, processing and storage.

This may sound like science fiction, and certainly has been explored in some of the more imaginative renditions of machine-becomes-man. However, the reality is that neuromorphic systems are here and while they’re still at the early stages of development and there are still obstacles to overcome, they represent a huge leap forward.

The history of neuromorphic sensor technology

The initial idea of neuromorphic sensor technology dates back further than you might think, to the publication in 1989 of a book entitled “Analog VLSI and Neural Systems”, written by Professor Carver Mead at Caltech. This led to one of Professor Mead’s students, Misha Mahowald, developing her PhD thesis on neuromorphic chips. It didn’t take long for tech giant Logitech to pick up on the technology: in 1996 it set up R&D departments to delve deeper into the potential of neuromorphic sensor applications.

Logitech and Caltech were not the only groups interested in this emerging technology. Since the mid-1990s several organizations have worked to commercialize neural systems, with the Dynamic Vision Sensor (DVS) developed in 2008.

As with all first-generation technology, some designs worked and some didn’t. But this flurry of activity did pave the way for a more in-depth examination of the potential and applications of neuromorphic technology further down the line.

Characteristics and features of neuromorphic sensors

The DVS has since been superseded by advances that quickly followed its initial release, including the proposal of a silicon retina design that outputs an asynchronous address-event representation, or AER. Utilising a 128×128 pixel grid and time-coded addresses, the retina can encode and transmit events between neurons and pixels to create a neural network.
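To make the idea of an address-event concrete, here is a minimal sketch of how a single event on a 128×128 grid could be packed into one address word and unpacked again. The bit layout (7 bits per axis plus one polarity bit) and the names `Event`, `encode_address` and `decode_address` are assumptions made for illustration only; they are not the wire format of any particular sensor.

```python
from typing import NamedTuple, Tuple

GRID_SIZE = 128   # 128x128 pixel grid, as described in the text
COORD_BITS = 7    # 2**7 == 128, so 7 bits are enough for one axis


class Event(NamedTuple):
    """One address-event: where it happened, which way, and when."""
    x: int
    y: int
    polarity: int       # 1 = pixel got brighter, 0 = pixel got darker
    timestamp_us: int   # time code in microseconds


def encode_address(x: int, y: int, polarity: int) -> int:
    """Pack pixel coordinates and polarity into a 15-bit address.

    Hypothetical layout: [ y: 7 bits | x: 7 bits | polarity: 1 bit ]
    """
    if not (0 <= x < GRID_SIZE and 0 <= y < GRID_SIZE):
        raise ValueError("pixel coordinates outside the 128x128 grid")
    if polarity not in (0, 1):
        raise ValueError("polarity must be 0 or 1")
    return (y << (COORD_BITS + 1)) | (x << 1) | polarity


def decode_address(address: int) -> Tuple[int, int, int]:
    """Recover (x, y, polarity) from a packed address."""
    polarity = address & 1
    x = (address >> 1) & (GRID_SIZE - 1)
    y = (address >> (COORD_BITS + 1)) & (GRID_SIZE - 1)
    return x, y, polarity


# A pixel at column 5, row 3 gets brighter 1,250 microseconds into the recording.
event = Event(x=5, y=3, polarity=1, timestamp_us=1250)
address = encode_address(event.x, event.y, event.polarity)
print(address, decode_address(address))
```

The point of the sketch is that each event carries only a small address plus a time code, rather than a full image, which is what lets the sensor transmit events asynchronously as they occur.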

DVS itself has been overtaken by the development of two key ‘camera’ applications: the Asynchronous Time-based Image Sensor, or ATIS, in 2011, and the Dynamic and Active-pixel Vision Sensor, or DAVIS, in 2014. The advantage of these 2.0 versions is that they offer a greater level of resolution and contrast. The pixel count is at least double, and the dynamic range is higher, increasing their versatility and opening them up to a wider range of applications.

Several generations of neuromorphic sensors have since been developed by civilian organisations around the world. In recent times, new advances have focused on overcoming the limitations of DAVIS sensor systems by combining full pixel value (RGB), single-bit, multi-bit and area events into one cohesive network. This should allow the next generation of sensors to produce far higher-quality, full-colour images.

As well as offering high temporal resolution, event cameras can respond much faster to environmental changes than conventional cameras, thanks to their low latency. The lack of a traditional shutter means that latency is measured in hundreds of microseconds.
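A quick back-of-the-envelope calculation shows why this matters. The sketch below compares the gap between frames of a conventional camera with an event latency in the hundreds of microseconds. The 30 frames-per-second rate, the 300-microsecond latency and the 10 m/s object speed are all illustrative assumptions, not measurements from any specific device.

```python
def frame_interval_us(frames_per_second: float) -> float:
    """Time between consecutive frames, in microseconds."""
    return 1_000_000 / frames_per_second


def distance_travelled_mm(speed_m_per_s: float, interval_us: float) -> float:
    """Distance an object moves during the interval, in millimetres."""
    # metres/second * microseconds = micrometres; divide by 1000 for millimetres
    return speed_m_per_s * interval_us / 1000


# Illustrative assumptions only.
ASSUMED_FPS = 30
ASSUMED_EVENT_LATENCY_US = 300
ASSUMED_SPEED_M_PER_S = 10

frame_gap_us = frame_interval_us(ASSUMED_FPS)
blind_frame_mm = distance_travelled_mm(ASSUMED_SPEED_M_PER_S, frame_gap_us)
blind_event_mm = distance_travelled_mm(ASSUMED_SPEED_M_PER_S, ASSUMED_EVENT_LATENCY_US)

print(f"Frame interval at {ASSUMED_FPS} fps: {frame_gap_us:.0f} us")
print(f"Object movement between frames: {blind_frame_mm:.1f} mm")
print(f"Object movement within event latency: {blind_event_mm:.1f} mm")
```

Under these assumptions the object moves roughly 333 mm between two frames, but only about 3 mm in the time it takes an event to be reported.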

The ability of neuromorphic sensors to capture both bright and dark scenes with no loss of detail makes them particularly useful, reducing the risk of over- or under-exposed images where details become lost. The low level of power required to operate event cameras also makes them suitable for a range of devices such as small drones, extending the operating period because the event camera is not such a drain on the drone’s battery.

Applications for the technology – what are the possibilities?

This advanced technology is on the cutting edge of electronics, but in the real world, what are its applications? The global market for neuromorphic computing and networking systems is predicted to grow significantly in the coming years, particularly with the advent of AI and machine learning technology.

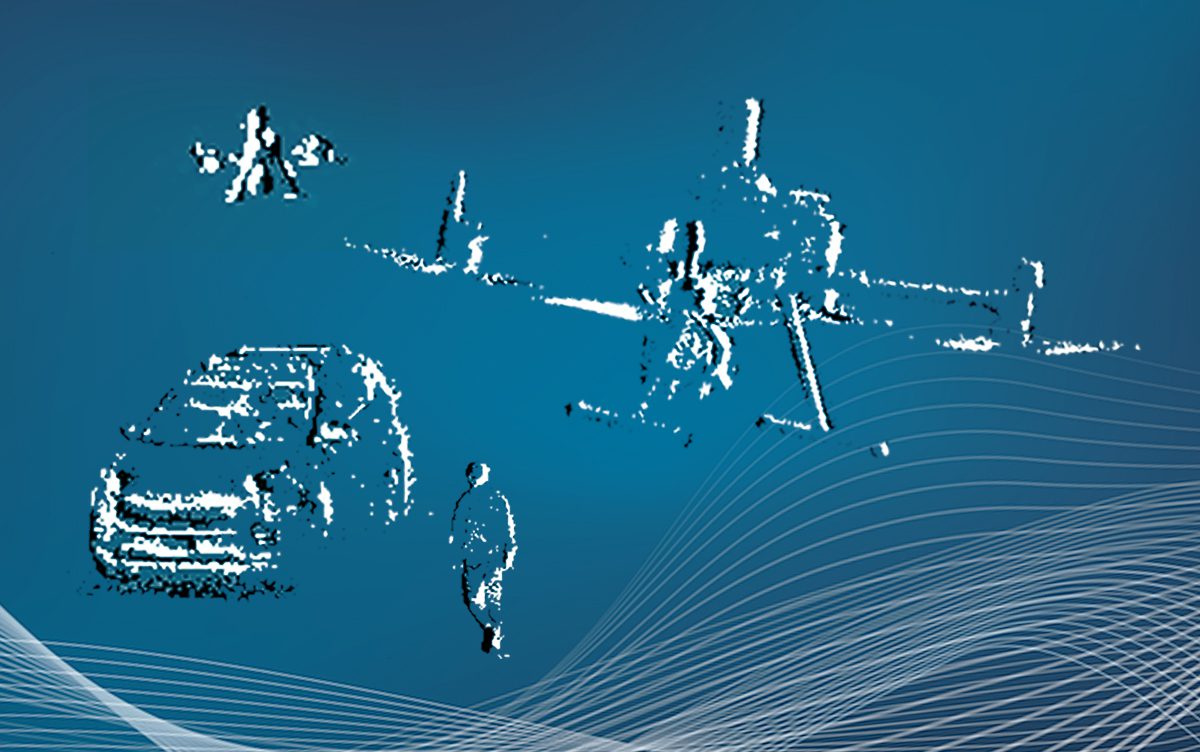

Multiple markets could benefit from neuromorphic systems, and we are already seeing them applied in the automotive industry with self-driving cars. Incorporated into vehicle systems for real-time object detection and recognition, neuromorphic sensors could bring forward the advent of driverless vehicles on our roads, as well as similar pilot-assist systems in the aviation industry.

Neuromorphic sensors obviously have a big role to play in the future of robotics and automation, especially given their ability to detect subtle changes in the environment, which allows them to identify objects with greater accuracy. This could in turn lead to autonomous robots that can move freely within any space without having to ‘map’ it out first.

The low latency with which neuromorphic sensors can process information makes them ideally suited to machine learning applications, taking AI to the next level in terms of its ability to predict and react, as well as enhancing its decision-making capabilities. Neuromorphic sensors can process data from multiple sources at the same time, accelerating detection, analysis and processing to new levels.

The importance of these networks in the advancement of AI and augmented reality-based systems cannot be overstated and could revolutionize the whole of the robotics field at a stroke.

The potential of neuromorphic systems is extraordinary and could advance a wide range of industries far beyond their current capabilities.

The application of neuromorphic sensors in surveillance and multi-spectral imaging

Event cameras that incorporate neuromorphic sensors represent a paradigm shift in the move towards more accurate machine vision. Put simply, they are bringing us closer than ever to the level of the human eye, with the added advantage that their ability to ‘filter out’ unimportant changes in the intensity of background pixels renders a clearer, more precise image.

The problem with frame-based cameras is that an excess of unnecessary information is gathered alongside the vital data. This requires additional filtering, either by a machine or by a highly trained human. In a real-world scenario such as an aerial surveillance platform, this means additional and complex diagnostic equipment, or a real-time live feed to a static ground base.
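The difference between the two approaches can be sketched in a few lines. The example below assumes the commonly described event-camera model, in which a pixel reports an event only when its log-intensity changes by more than a contrast threshold. The function name `events_from_frames`, the threshold of 0.2 and the tiny test frames are hypothetical values chosen for illustration; a real sensor does this per pixel in analogue circuitry rather than by comparing frames.

```python
import math
from typing import List, Tuple

Frame = List[List[float]]            # rows of pixel intensities
PixelEvent = Tuple[int, int, int]    # (x, y, polarity)


def events_from_frames(prev_frame: Frame, next_frame: Frame,
                       threshold: float = 0.2) -> List[PixelEvent]:
    """Emit an event for each pixel whose log-intensity change reaches the threshold.

    Polarity is +1 for a brightening pixel and -1 for a darkening one.
    Pixels that change by less than the threshold produce nothing at all.
    """
    events = []
    for y, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for x, (before, after) in enumerate(zip(prev_row, next_row)):
            # The +1.0 offset avoids log(0) for completely dark pixels.
            delta = math.log(after + 1.0) - math.log(before + 1.0)
            if abs(delta) >= threshold:
                events.append((x, y, 1 if delta > 0 else -1))
    return events


# A mostly static 2x3 scene: one pixel brightens, one darkens,
# and one flickers slightly (100 -> 105), staying below the threshold.
previous = [[100.0, 100.0, 100.0],
            [100.0, 100.0, 100.0]]
current = [[105.0, 200.0, 100.0],
           [100.0, 100.0, 50.0]]

events = events_from_frames(previous, current)
pixel_count = len(current) * len(current[0])
print(f"Frame-based readout: {pixel_count} pixel values")
print(f"Event-based readout: {len(events)} events -> {events}")
```

In this toy scene the frame-based readout delivers all six pixel values, while the event-based readout delivers only the two pixels that changed meaningfully. The small background flicker is dropped at the source instead of being filtered out later.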

The next generation of neuromorphic systems and sensor-based technology bypasses the need for additional diagnostics. Sometimes, the human eye can filter out too much of the static background and a vital piece of information can be lost.

Event cameras are the ‘Goldilocks’ solution, capturing the most important information from both the dynamic parts of an image and the static background, but without overloading the system with unnecessary data that could restrict the operator’s ability to assess and act on the information provided.

Event cameras produce a far more detailed composite image that can be analysed immediately by compatible software and multispectral systems in the field to provide immediate and vital operational information. Because of the compact nature of event cameras, this level of data analysis can make surveillance, search and rescue, and other applications more practical. Alternatively, the information that they gather can be relayed back to a static base for deeper analysis by both AI and human investigators.

Event cameras represent a move away from traditional frame-based cameras and towards compact, user-friendly equipment that delivers new levels of performance and efficiency.